Overview

The Mocap Promap software is a program I am building that does two things: motion capture and projection mapping. Combining the two produces something much cooler than either one on its own.

A simple example of motion-capture-based projection mapping is tracking calibration points so that the projection stays accurate even if the surface or the projector shifts slightly. A cooler and more technical example is tracking moving objects and projecting shapes and designs onto them. You can make a moving ball look like a planet, or project its speed right onto the ball. If you are building an arcade-style basketball shooting game, you could color each ball based on who threw it, no matter which ball it is, and pass that information to other software to award points within the game. The same approach could also be used to projection map onto people, or to create interactive art displays where the art on the software side reacts to the data provided by the motion capture.
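The calibration example above can be sketched as a planar homography. If four reference points are visible to the camera and their positions in projector pixels are known, a 3x3 matrix maps any camera pixel onto the projector. Re-detecting those points after the projector or surface moves gives an updated matrix. This is a minimal pure-Python sketch that assumes a flat surface and exactly four non-degenerate point pairs. The function names are hypothetical and not taken from the Mocap Promap code. With OpenCV available, `cv2.getPerspectiveTransform` computes the same matrix from four point pairs.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix3 = List[List[float]]


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    # Augmented matrix so that row swaps move a and b together.
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("calibration points are degenerate")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_points(src: Sequence[Point], dst: Sequence[Point]) -> Matrix3:
    """Hypothetical helper: 3x3 homography mapping 4 camera points to 4 projector points."""
    if len(src) != 4 or len(dst) != 4:
        raise ValueError("exactly four point pairs are required")
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # Each pair gives two linear equations in h11..h32 (h33 is fixed to 1).
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = _solve(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def camera_to_projector(h: Matrix3, point: Point) -> Point:
    """Hypothetical helper: map one camera pixel into projector pixel coordinates."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    u = (h[0][0] * x + h[0][1] * y + h[0][2]) / w
    v = (h[1][0] * x + h[1][1] * y + h[1][2]) / w
    return (u, v)
```

Every tracked position can then pass through `camera_to_projector` before it is drawn. Only the four reference points need to be found again when something shifts.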

The Hardware

All that is needed is a projector, a computer, and a camera. (A higher-FPS camera will result in more accurate projection mapping!)

The Software

As mentioned, the software is split into two parts: motion capture and projection mapping. The motion capture part reads camera input, uses the GPU (or CPU) to track movement, and sends the tracking data to the projection mapping part. The tracked movement can be hands, fingers, bodies, circles, other shapes, or anything else that is additionally programmed in. The projection mapping side can be built in a game engine such as Unity, Godot, or Unreal Engine.

The data is passed in with parameters such as position coordinates, size/radius, and anything else set by the mocap program. Within the game engine, the motion capture data can then be used however you like in a game or animation to create awe-inspiring effects.
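The source does not say how the two programs communicate, so here is one possible approach. The mocap program serializes each frame's tracked objects (position, radius, and any extra parameters) as JSON and sends it as a single UDP datagram to a port that the game engine listens on. The field names, the choice of UDP, and the JSON format are all assumptions for illustration. UDP fits this kind of stream because a late packet is useless anyway, and a dropped frame is simply replaced by the next one.

```python
import json
import socket
from dataclasses import asdict, dataclass, field
from typing import Dict, List, Tuple


@dataclass
class TrackedObject:
    """One tracked item in a single camera frame (field names are hypothetical)."""
    kind: str            # e.g. "hand", "finger", "ball"
    x: float             # horizontal position in camera pixels
    y: float             # vertical position in camera pixels
    radius: float = 0.0  # apparent size on screen, in pixels
    extra: Dict[str, float] = field(default_factory=dict)  # any other parameters


def encode_frame(frame_id: int, objects: List[TrackedObject]) -> bytes:
    """Serialize one frame of tracking data as compact UTF-8 JSON."""
    payload = {"frame": frame_id, "objects": [asdict(o) for o in objects]}
    return json.dumps(payload, separators=(",", ":")).encode("utf-8")


def send_frame(
    sock: socket.socket,
    address: Tuple[str, int],
    frame_id: int,
    objects: List[TrackedObject],
) -> None:
    """Send one frame as a single UDP datagram (fire-and-forget, no retries)."""
    sock.sendto(encode_frame(frame_id, objects), address)
```

On the sending side, this would be called once per camera frame with a socket created by `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)`. On the engine side, a UDP listener on the same (hypothetical) port would decode each datagram and move the projected objects to match.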

Download

Currently the two parts of this software are separate programs, one for motion capture and one for projection mapping, as described above. This way the motion capture data is not tied to a single program and can be used by a variety of projection mapping programs. I am still working on bundling them together more cleanly before adding a free download here, and on giving the Mocap software a proper user interface instead of only a CLI.

[Link to Github repo with source code]

Non-hand Object Tracking

The same way the X and Y coordinates of a hand can be tracked and passed through from Python, other objects can be tracked as well.
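For a high-contrast object like a plain white ball against a darker background, tracking does not need a trained model. Here is a minimal sketch that assumes a grayscale frame given as rows of 0-255 values and only one bright object in view. It thresholds the frame, takes the centroid of the bright pixels as X and Y, and derives a radius from the blob's area. The function name and default threshold are hypothetical. A real OpenCV pipeline would more likely use `cv2.threshold`, `cv2.findContours`, and `cv2.minEnclosingCircle`, which can also handle several objects at once.

```python
import math
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale rows, values 0-255


def track_bright_blob(
    gray: Image, threshold: int = 200
) -> Optional[Tuple[float, float, float]]:
    """Hypothetical helper: return (x, y, radius) of the bright region, or None.

    x and y are the centroid of all pixels at or above `threshold`. The radius
    is that of a circle with the same area (area = pi * r**2). This assumes a
    single bright object is in view.
    """
    count = 0
    sum_x = 0
    sum_y = 0
    for y, row in enumerate(gray):
        for x, value in enumerate(row):
            if value >= threshold:
                count += 1
                sum_x += x
                sum_y += y
    if count == 0:
        return None  # nothing bright enough in this frame
    return (sum_x / count, sum_y / count, math.sqrt(count / math.pi))
```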

I have tested it with ball tracking on a plain white sphere, and in real time it can projection map onto just the surface of the sphere! This works by passing through the same X and Y parameters as before, plus a third value: the object's apparent size on screen (its radius). On the OpenCV/Python side, this value is simply the measured size. In Unity or another game engine, it can be used as the Z coordinate in a 3D scene (a larger radius means the object is closer, so it is rendered larger). In a 2D scene, it can be passed in as a size that restricts the projection to just the shape in real time!
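Using the on-screen radius as depth works because of the pinhole camera model. An object of real radius R at distance Z from a camera with focal length f (in pixels) appears with radius r = f * R / Z, so Z = f * R / r. Here is a minimal sketch that assumes the ball's real radius and the camera's focal length and image center are known from calibration. All parameter names are hypothetical. The sketch turns a tracked (x, y, radius) into a 3D position that a game engine scene could use directly.

```python
from typing import Tuple


def ball_position_3d(
    x_px: float,
    y_px: float,
    radius_px: float,
    real_radius_m: float,
    focal_px: float,
    cx: float,
    cy: float,
) -> Tuple[float, float, float]:
    """Hypothetical helper: back-project a tracked ball to camera space in meters.

    Assumes an undistorted pinhole camera with focal length `focal_px` (pixels)
    and principal point (cx, cy), and a ball whose real radius is known.
    """
    if radius_px <= 0:
        raise ValueError("radius_px must be positive")
    # Apparent radius shrinks in proportion to distance: r = f * R / Z.
    z = focal_px * real_radius_m / radius_px
    # Similar triangles give the lateral offsets at that depth.
    x = (x_px - cx) * z / focal_px
    y = (y_px - cy) * z / focal_px
    return (x, y, z)
```

Doubling the apparent radius halves the estimated distance, which matches the "larger when closer" behavior described above. Image rows grow downward, so the sign of y may need flipping for engines whose Y axis points up.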

The GitHub source code for this will be uploaded soon!

Motion-Capture Projection-Mapping

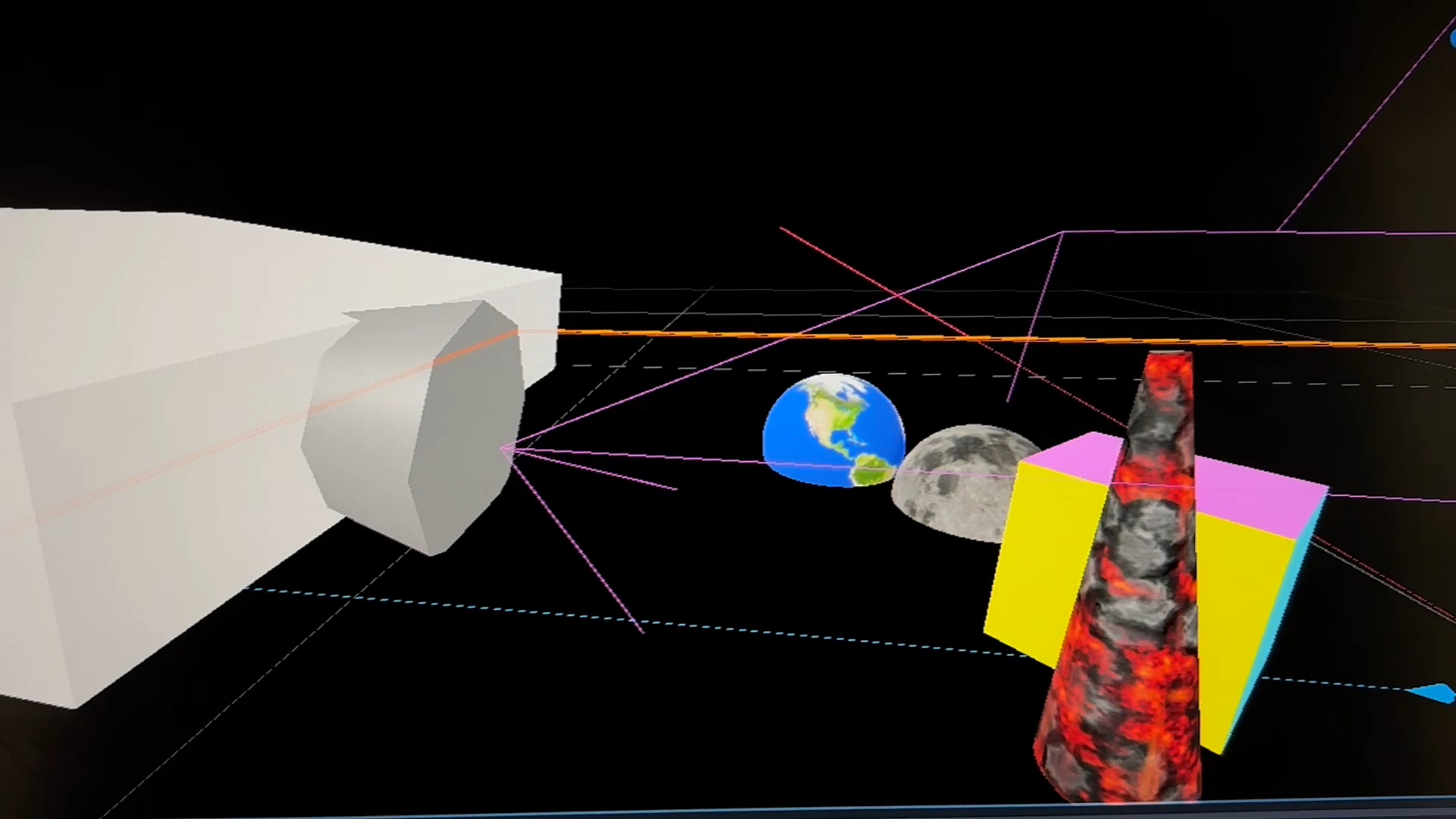

3D scene rendered in 3D engine

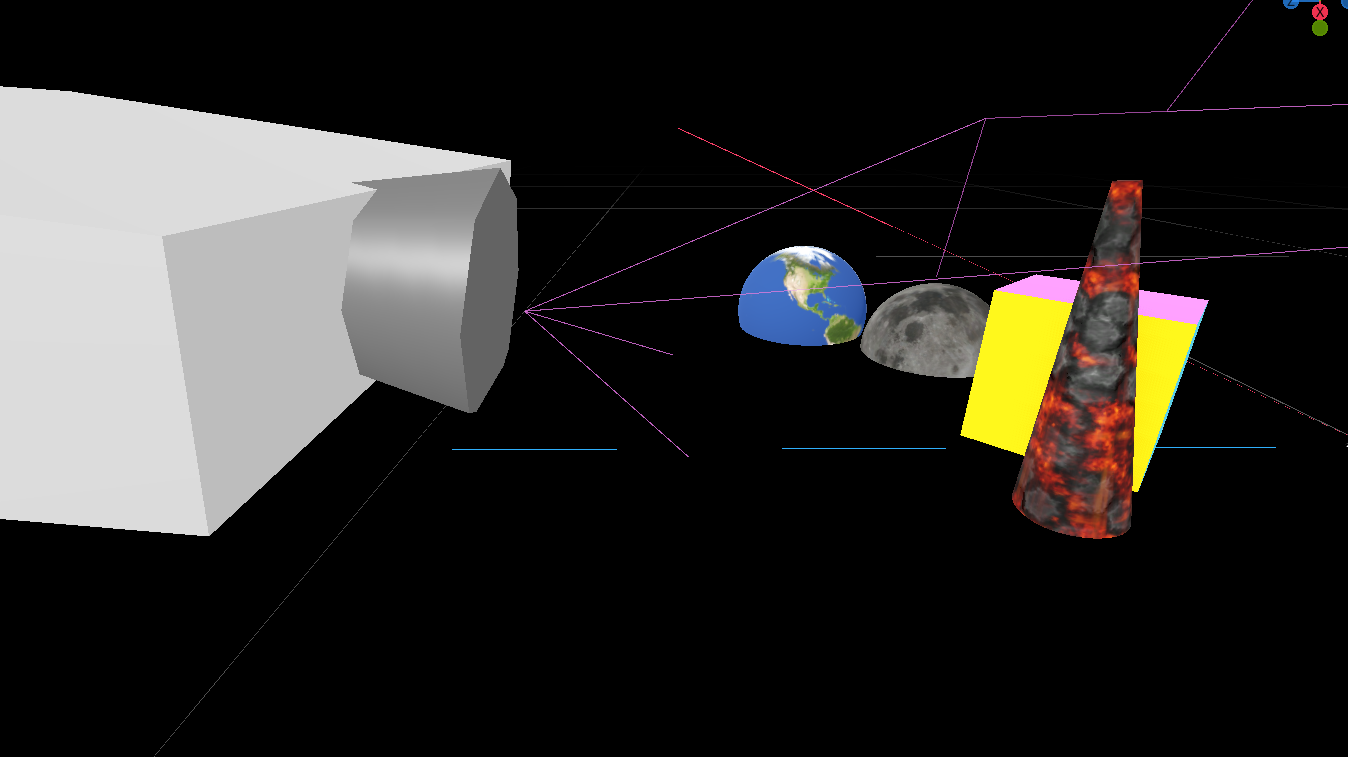

Projection onto 3D objects scene